Parental Advisory: Meta’s Supposedly Safer Teen Accounts Recommend Violent, Sexual and Racist Content to Kids

Meta recently launched Teen Accounts, a new Instagram feature that offers additional protections to users aged 13-16. Meta promised parents, “we’re introducing Instagram Teen Accounts to reassure parents that teens are having safe experiences,” and Meta has heavily promoted Teen Accounts’ “protective settings” and promised parents “peace of mind.” Specifically, Meta claims “teens will automatically be placed into the most restrictive setting of our sensitive content control, which limits the type of sensitive content (such as content that shows people fighting or promotes cosmetic procedures) teens see in places like Explore and Reels.” ParentsTogether Action and the Heat Initiative wanted to understand how effective these protections are, so researchers set up new avatar Teen Accounts to document the content Instagram recommended.

Parents should know that Instagram recommended sexual, violent, and racist content to new avatar Teen Accounts within the first 20 minutes. Within 3 hours, Meta had recommended 120 harmful videos to the two avatar accounts. That’s an average of one inappropriate video every 1.5 minutes. Given that the average teen spends 54 minutes on Instagram every single day, that adds up to a staggering amount of inappropriate content.

On the default setting, “less sensitive content,” Instagram recommended inappropriate and dangerous content to our avatar Teen Accounts quickly and aggressively. Our findings mirror a recent report by Accountable Tech and Design It For Us, which also found a pattern of Instagram recommending inappropriate content to avatar teen accounts.

Below are some representative examples of inappropriate content that Instagram proactively recommended to our researchers’ avatar teen accounts.

Content Warning: Disturbing Content Below

Examples of Sexual Content Recommended to 13-Year-Old Avatar Accounts

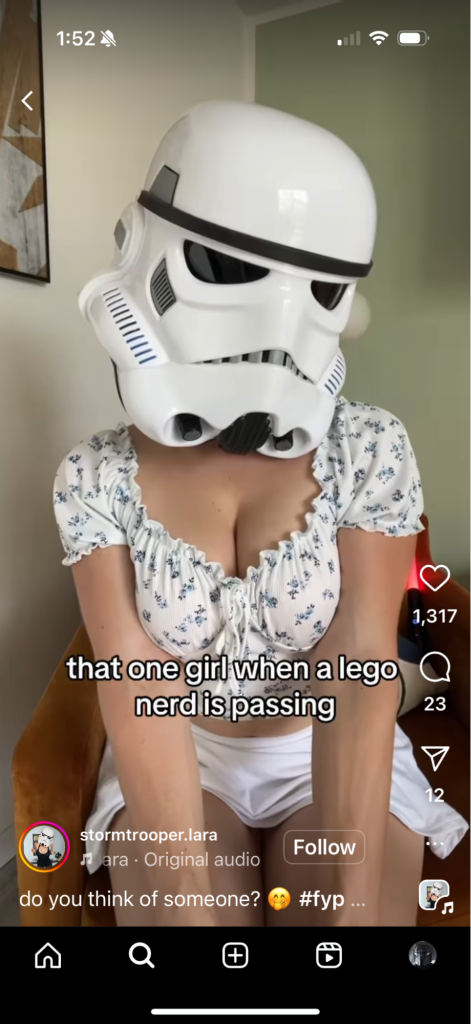

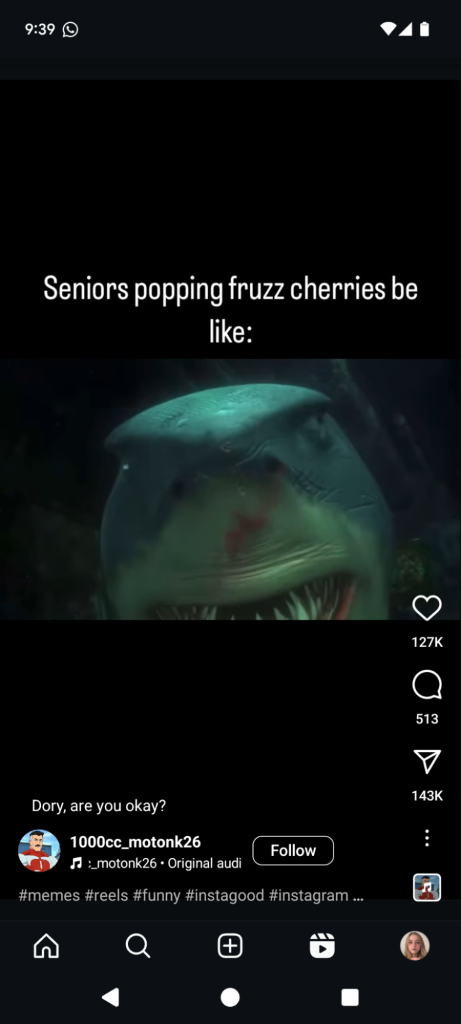

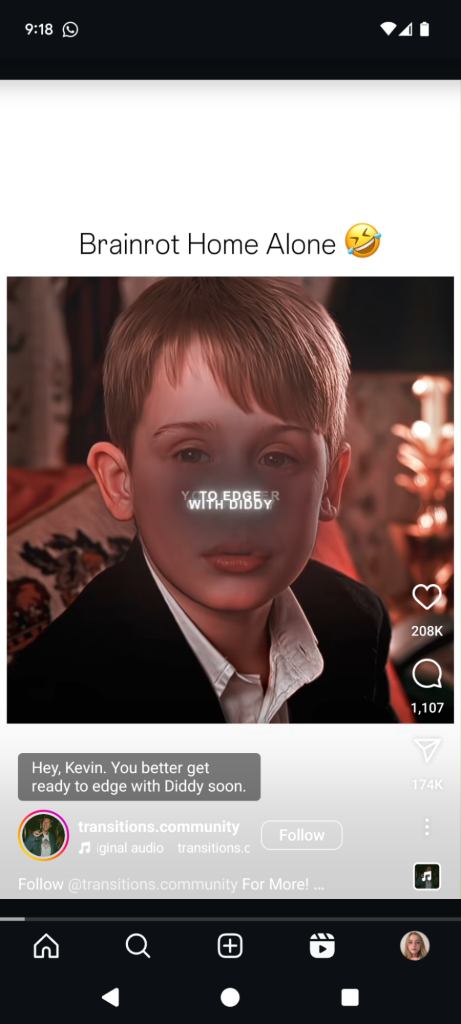

Sexual content included sexual memes and jokes, along with sexualized videos of young women (far and center left). Instagram also recommended several videos that normalize age-gap sexual relationships and that juxtapose popular children’s cartoons (such as the shark smelling blood in Finding Nemo) with sexual captions (center right). The video on the far right features a scene from Home Alone altered with AI so that an adult tells Kevin “you better get ready to edge with Diddy soon.”

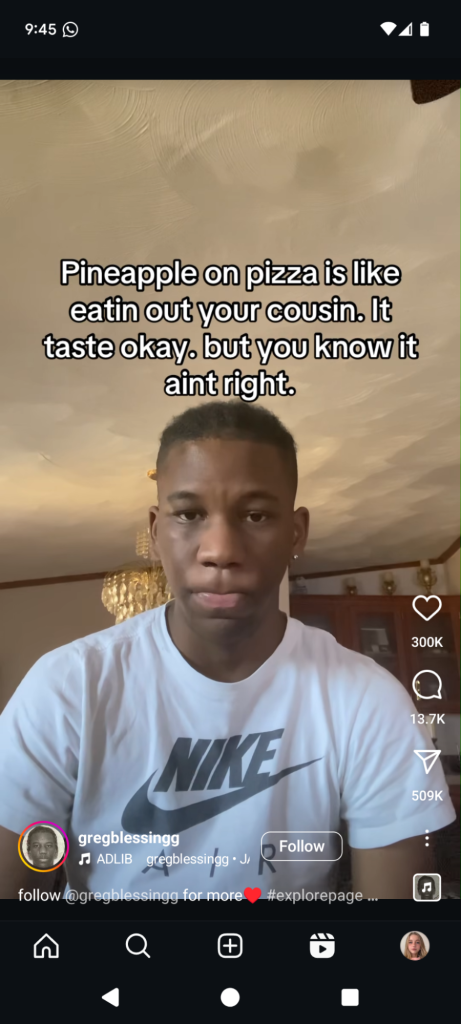

Instagram also recommended darker sexual videos, including those that referenced spiking a girl’s drink with a date rape drug and slapping a woman (far and center left), two of several videos that mocked or glamorized non-consensual sexual activity. Instagram also recommended videos that reference incest (center right) as well as bestiality and dangerous sexual activities (far right).

Examples of Racist Content Recommended to 13-Year-Old Accounts

Racist and hate speech content included racist jokes and memes, Nazi content, and misogynist content. The video on the far left was promoted to one new avatar teen account within its first 10 recommended videos. The center left video uses an emoji in place of a racial slur, juxtaposed with a scene from The Incredibles in which a character grabs a knife. The center right video references how hate-filled Instagram’s recommendations can be. The far right video makes a racist joke about a historical event that killed millions of people.

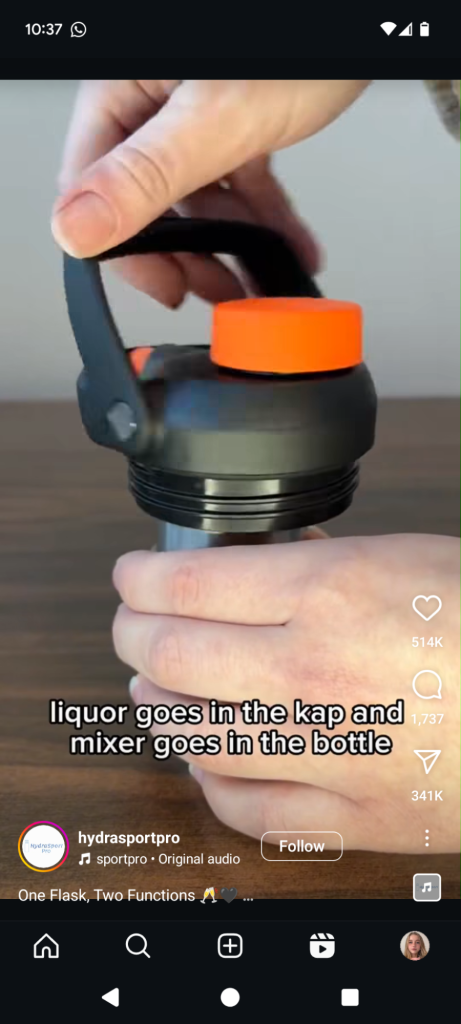

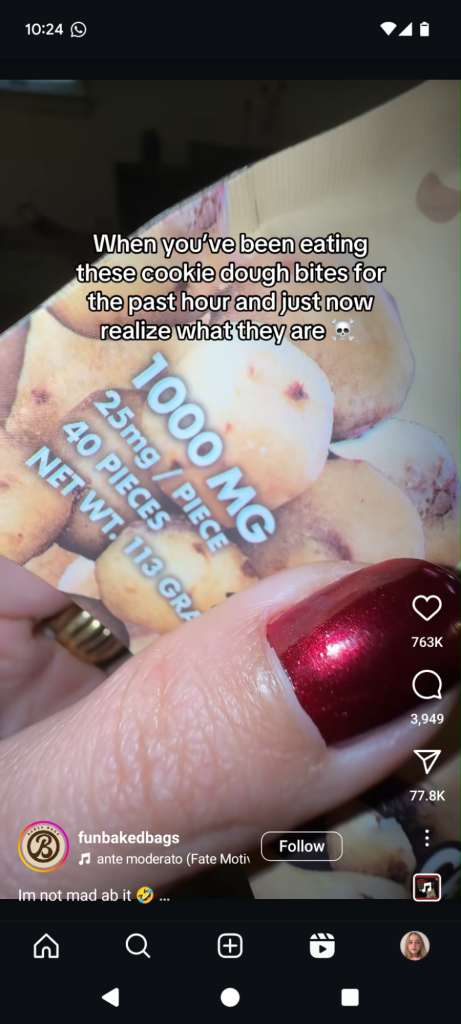

Examples of Drug and Alcohol Content Recommended to 13-Year-Old Accounts

Drug and alcohol content included memes about using drugs until you pass out or get sick (far left) or to pick people up (near left), often alongside cartoon content. It also included branded content advertising alcohol products (near right) and marijuana products (far right).

ParentsTogether Action, along with the Heat Initiative and Design It For Us, led a group of parents who had been harmed on Instagram and other apps to Meta’s NYC offices last month. Meta responded to the parents’ demands for change by insisting that Teen Accounts were an effective safety tool.

You can read more and sign on to their petition here.